I was recently asked to write an online review for a business. I agreed since I had good things to say and wanted to help them with their effort to expand to a new location. They asked me to share the review with them before posting it to make sure a few keywords were added. This would help with their SEO (search engine optimization) online. Knowing the practical importance of things like SEO for small businesses, I also agreed to this request.

When I shared the review, a staff member immediately sent me a completely new draft of the text that was obviously filtered through ChatGPT or a similar large language model. He said that this new draft captured the spirit of my review, but included multiple keywords and phrases identified by their SEO research. He also told me that one of my sentences in the review was too colloquial and might not be understood by potential customers. Therefore, easily digestible language was necessary to dispel any confusion. Besides being radically different from my original draft, the prose of the new AI-generated review was immaculate: no typos, no grammatical errors, no semantic ambiguity.

It also sounded exactly like what it was: AI-generated. No style, no personality, no originality. After agreeing to the previous stipulations for the review, I had to draw a line here. I explained that I could tell that the new “draft” of my review was filtered through ChatGPT and that I have a personal policy of never using AI-generated or processed text in any writing under my name. The staff member responded that it didn’t actually matter much what was in the review; all the business needed right now was a large number of reviews with a plethora of keywords and phrases that their SEO research deemed necessary.

I informed him that he could look elsewhere for reviewers.

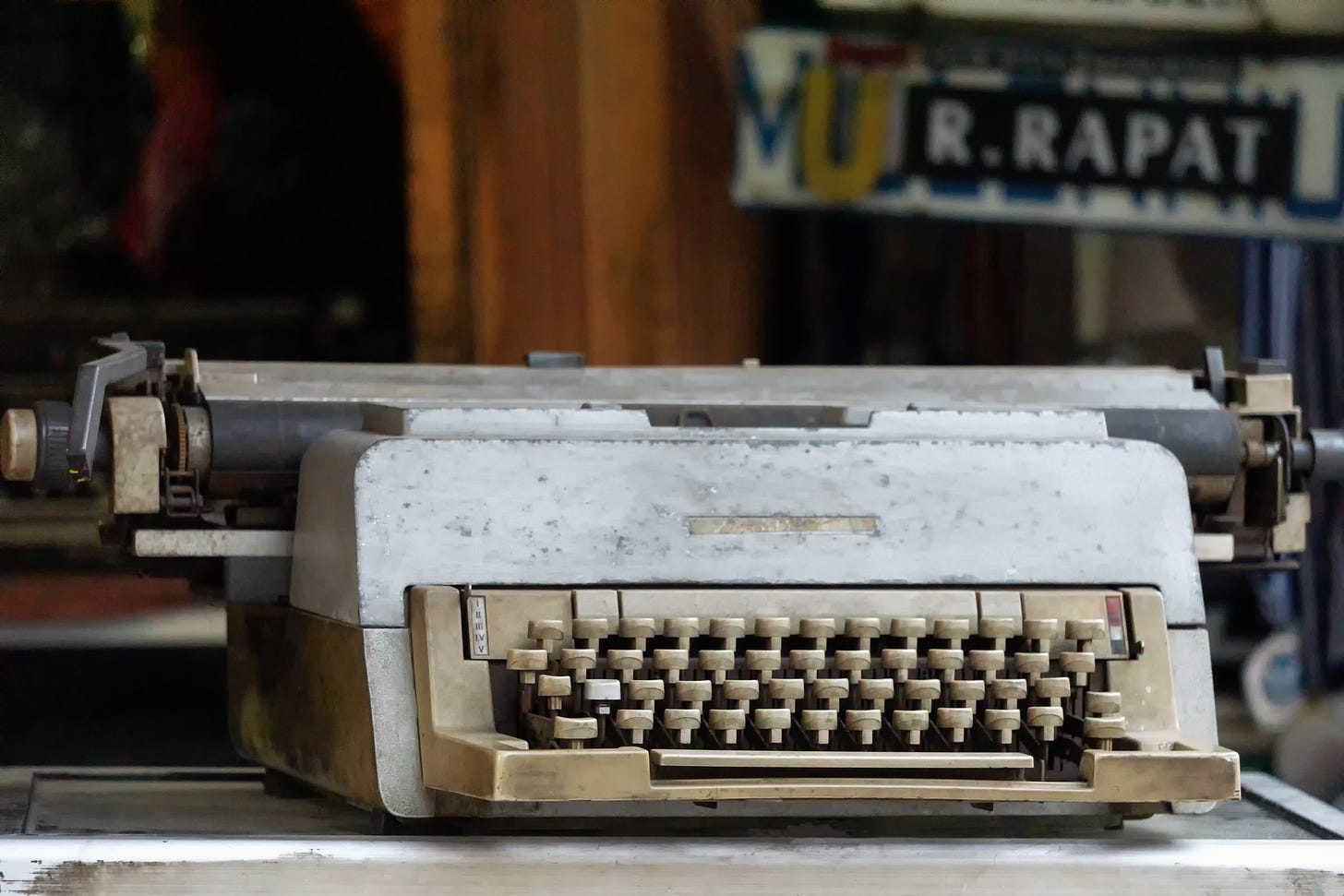

When I do archival research, I deal with materials that are handwritten or typed on a typewriter. Letters, diary entries, and drafts of speeches often bear the traces of human imperfection: a misspelling here, a crossed-out word there, and a host of other relics of the pre-digital past. These traces are a testament to the circuitous thought process that stands behind each document.

Everyday writing became much more sanitized in the digital age thanks to the advent of word processing software, email applications, and other technologies that entirely remove these traces. The backspace key, spell checkers, and automatically generated word suggestions come to mind. A messy human thought process still exists behind the final product, but the traces of this process can now be hidden from view. From this perspective, AI-generated text is the next step in the sanitization of digital writing. But this time the thought process is not only disguised; it is eradicated.

In his important piece, “Automatic Against the People: Reading, Writing, and AI,” Jason Read asks “what is lost when we automate the acts of reading and writing.” His answer is human thought itself, because writing, as a process, is a form of thinking. When we outsource things like reading and writing to ChatGPT, we lose “skills that are integral to what it means to be a human being, to be able to think and make sense of the world.”

We also miss the fundamental point of certain genres of writing. Read reports that

> Google recently ran an advertisement for the Gemini AI system in which a father used the software to write a letter that his young daughter could send to an Olympic athlete who inspired her. As Ted Chiang has argued, it is unclear what exactly is being sold here, the point of such a letter is not to be eloquent or well written, the point is that the child has written it. It is a singular expression. I would go further than Chiang here, however, and argue that what is lost when we automate something, even writing a fan letter, is learning how to even begin to articulate and know what [it] is that we know or think in the first place.

Something similar could be said about business reviews. The primary purpose of a positive review is to offer a “singular expression” of appreciation. Writing such a review also gives the writer a chance to reflect on the meaning of that business in his or her life and what exactly it is that makes it special. The words that comprise the review are nothing less than the traces of human thought directed along these lines.

However, in the digital era, the objective demands of technologies like SEO render this subjective process obsolete. As

argues, the economy of online reviews fundamentally serves to “legitimate the platform” on which the reviews are written, to “make it seem like a normal and trustable venue for commerce.” The now ubiquitous AI-generated review is but an extension of this logic.

I used to see typos, grammatical errors, and semantic ambiguities as signs of laziness or unprofessional writing. After the mass rollout of ChatGPT, my view has changed. What were once careless mistakes now feel like welcome reminders of the writer’s humanity. The inverse is also true. When I encounter flawless prose, the first question that comes to mind is whether it was generated by AI. Writing that appears too perfect feels bereft of the human qualities that bespeak a thought process behind the prose.

Humanity has, at least to some extent, traditionally been defined by imperfection. This is especially true in (but certainly not limited to) cultures influenced by Christianity. Against this backdrop, valorizing the perfection of AI-generated writing over the imperfection of human writing evokes the age-old Gnostic heresy: the belief that there is no distinction between the Creation and the Fall. The material world of illness, impoverishment, and imperfection is evil. Neo-Gnostics, in turn, view AI—understood as a transcendent spiritual force standing above humanity—as a welcome savior.

I try to avoid typos at Handful of Earth. I like to present polished work and maintain a professional standard for my readers. Despite my best efforts, typos and other traces of the imperfect and unfinished business of human thought occasionally slip by. In the age of ChatGPT, maybe that’s not such a bad thing.
